
- Introduction: What is the CLT and why should you care?

- Intuition: Understanding why the CLT makes sense

- Proof: Using Fourier transforms to prove the CLT

Introduction to the Central Limit Theorem

The central limit theorem is incredibly useful, because it allows us to make meaningful predictions about a population, just by taking a sample.

Imagine you are at the mall one day and you see people lined up at a "magic money machine". One person presses the magic button and $88 comes out! The second person presses it, but this time only $9 comes out. The third gets $64...

Sounds pretty good, right? But there are hundreds of people lined up already, and you decide it's only worth the wait if the average payout is at least $50.

But what can you really know about the true mean payout by only observing a sample?

Press the button below as many times as you like, and watch how the estimate becomes more precise as the sample size increases.

[Interactive demo: MAGIC MONEY MACHINE, showing the sample size n, the sample mean x̄ and the sample SD s]

You may notice that the confidence interval estimate relies on a normal distribution. But you never assumed the actual population data were normally distributed. In fact, the money machine distribution is not normal, which you will notice if you take a large enough sample.

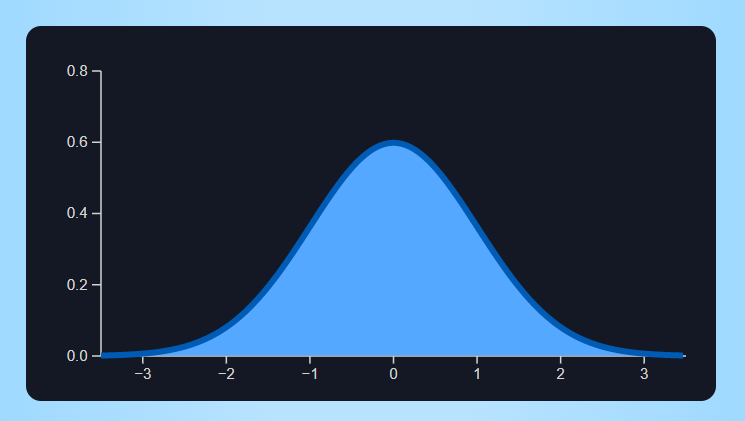

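The normal / Gaussian distribution is the famous bell-shaped curve with mean μ, standard deviation σ and probability density function:

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{(x-\mu)^2}{2\sigma^2}}$$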

In another article, I discuss "how the bell curve gets its shape".

That is the power of the central limit theorem.

Roughly speaking, the theorem allows you to use a normally distributed random variable to model the sample mean. This approximation becomes more and more accurate as your sample size increases. We do need to assume that selections are "independent" (one outcome from the money machine does not affect the next outcome) and "identically distributed" (the random selection process used is the same each time).

The CLT also tells us that the approximating normal distribution has:

- a mean equal to the population mean (μ)

- a standard deviation equal to the population standard deviation divided by the square root of the sample size (σ ÷ √n)
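A quick simulation makes these two facts concrete. The real money machine's payout distribution is never given, so this sketch uses a hypothetical skewed stand-in: exponential payouts with a mean of $50. For an exponential distribution the standard deviation equals the mean, so σ = 50. With n = 25 presses per sample, the sample means should centre on 50 with a standard deviation close to 50 ÷ √25 = 10.

```python
import random
import statistics

def sample_means(draw, n, trials, rng):
    """Return `trials` sample means, each the average of n independent draws."""
    return [statistics.fmean(draw(rng) for _ in range(n)) for _ in range(trials)]

def payout(rng):
    # Hypothetical payout distribution (an assumption, not the article's machine):
    # exponential with mean $50, so its standard deviation is also $50.
    return rng.expovariate(1 / 50)

rng = random.Random(0)          # fixed seed so the run is reproducible
mu, sigma, n = 50.0, 50.0, 25
means = sample_means(payout, n, trials=20_000, rng=rng)

print(f"mean of sample means: {statistics.fmean(means):.2f}   (mu = {mu})")
print(f"SD of sample means:   {statistics.stdev(means):.2f}   (sigma / sqrt(n) = {sigma / n ** 0.5})")
```

Increasing `n` shrinks the second figure in proportion to 1/√n. Even though individual payouts are strongly skewed, a histogram of `means` looks increasingly bell-shaped.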

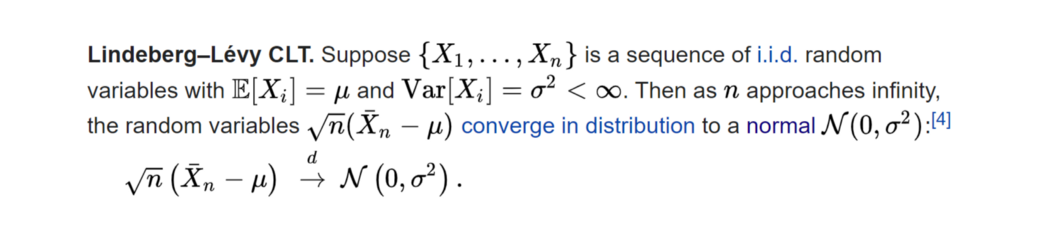

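Formal statement of the CLT

Let $X_1, X_2, \ldots, X_n$ be independent and identically distributed random variables with mean $\mu$ and finite variance $\sigma^2$, and let $\bar{X}_n = \frac{1}{n}\sum_{i=1}^{n} X_i$ be their sample mean. Then as $n \to \infty$, the distribution of $\sqrt{n}\,(\bar{X}_n - \mu)$ converges to a normal distribution with mean 0 and variance $\sigma^2$:

$$\sqrt{n}\,(\bar{X}_n - \mu) \xrightarrow{d} N(0, \sigma^2)$$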

It is not at all obvious why the sample mean for a non-normal distribution should converge to a normal distribution. The next section contains a series of animations to give some intuition as to why it occurs.

Intuition for the Central Limit Theorem

Let's consider a similar but simpler example. Imagine a standard 6-sided die where the numbers 5 and 6 have been replaced by 1s. This die now has a 3/6 chance of showing a 1, and a 1/6 chance of showing each of 2, 3 and 4.

If you like the "money machine" example, you can think of it as a money machine where you have a 3/6 chance of getting $1, and a 1/6 chance of getting $2, $3 or $4.

The probability distribution for the die is shown below. It is our population distribution. The population mean (highlighted in yellow) is equal to 2, because (1 + 1 + 1 + 2 + 3 + 4) ÷ 6 = 2.
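The population variance of this die comes from the same kind of calculation. The squared deviations of the six faces from the mean of 2 are 1, 1, 1, 0, 1 and 4:

```latex
\sigma^2 = \frac{1 + 1 + 1 + 0 + 1 + 4}{6} = \frac{8}{6} = \frac{4}{3},
\qquad \sigma = \sqrt{4/3} \approx 1.155
```

By the σ ÷ √n rule, the standard deviation of the mean of n such dice is therefore $\sqrt{4/(3n)}$.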

Now think about how we can generate the histogram for the mean of two dice… If the first die is a 1, the possible outcomes are (1,1), (1,2), (1,3) and (1,4). So we distribute the first bar in the histogram across these outcomes, which have sample means of 1, 1.5, 2 and 2.5 respectively. Given that the first die is a 1, (1,1) has the largest probability of 1/2, while (1,2), (1,3) and (1,4) each have a probability of 1/6. Since the first bar has height 1/2, the outcome (1,1) ends up with probability 1/2 × 1/2 = 1/4, and each of the other three with 1/2 × 1/6 = 1/12.

Click the "convolve" button below to see it happen.

Because the mean of the second die is 2, the outcomes (1,2), (2,2), (3,2) and (4,2) have been highlighted. Notice how these highlighted bars have moved exactly halfway towards the population mean. For example, given that the first die was a 4, the "expected" outcome (4,2) now has a sample mean of (4+2) ÷ 2 = 3.

And if you are wondering what the word "convolve" means... the distribution of the sum of two independent random variables is called the "convolution" of their distributions. In this case, we are taking the convolution of the die's distribution with itself (then dividing by 2 to get the mean).

Convolution of discrete random variables

The general formula for the distribution of the sum of two independent discrete random variables X and Y is:

$$P(X + Y = z) = \sum_{k} P(X = k)\, P(Y = z - k)$$

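For example, the probability that the mean of the first two dice is 2 (that is, their sum is 4) is found by calculating:

$$P(X_1 + X_2 = 4) = P(1)P(3) + P(2)P(2) + P(3)P(1) = \tfrac{1}{2}\cdot\tfrac{1}{6} + \tfrac{1}{6}\cdot\tfrac{1}{6} + \tfrac{1}{6}\cdot\tfrac{1}{2} = \tfrac{7}{36}$$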
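The same calculation can be automated. This sketch represents a distribution as a Python dict mapping each value to its probability, which is just an illustrative choice. It convolves the modified die with itself using exact fractions, then divides each sum by 2 to get the distribution of the mean.

```python
from collections import defaultdict
from fractions import Fraction

# The modified die from the text: faces 5 and 6 replaced by 1s.
die = {1: Fraction(3, 6), 2: Fraction(1, 6), 3: Fraction(1, 6), 4: Fraction(1, 6)}

def convolve(p, q):
    """Distribution of X + Y for independent X ~ p and Y ~ q (dicts: value -> probability)."""
    out = defaultdict(Fraction)          # Fraction() == 0
    for x, px in p.items():
        for y, qy in q.items():
            out[x + y] += px * qy        # P(X = x) * P(Y = y) contributes to the sum x + y
    return dict(out)

two_dice_sum = convolve(die, die)
# Divide each sum by 2 to get the distribution of the sample mean of two dice.
two_dice_mean = {Fraction(s, 2): p for s, p in sorted(two_dice_sum.items())}

for mean, prob in two_dice_mean.items():
    print(f"mean {float(mean):.1f}: {prob}")
```

The entry for a mean of 2 is 7/36. This matches the hand calculation ½·⅙ + ⅙·⅙ + ⅙·½ for a sum of 4, and the entry for a mean of 1 is the 1/4 obtained from the first bar.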

But I think the visual demonstration gives us more insight than the calculation. Press the "convolve" button again to see the convolution of the 2-dice distribution with itself.

We can see that during the convolution, each bar is broken down into a scaled-down copy of the original distribution. Its total height is equal to the height of the original bar. Its width is half the width of the original distribution, and its mean is halfway between the original x value and the population mean of 2.

These scaled-down distributions are then piled on top of each other to form the convolution. Values close to the mean are within range of more of these mini-distributions, which is why the convolution tends to cluster more around the mean.

The leftmost bar cannot move further left, so at least part of it must move to the right. The same is true of the rightmost bar, in the opposite direction. So the extreme values (in this case 1 and 4) become less and less likely, and it makes sense that in the limit their probabilities will approach zero.

The increasing symmetry also starts to make sense when we realise that with each convolution, the bars furthest away from the mean move the furthest towards the mean.

The animation below lets you repeat the convolution process up to a sample size of n = 32.

Note that after the convolution, the total height of all the bars remains constant (as the total probability is 1). The convolved distribution appears to decrease in height because there are roughly twice as many bars (each with half the width), so we rescale the y-axis after each convolution.
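The repeated convolution in the animation can be reproduced with exact arithmetic. This sketch again uses a dict from value to probability as an illustrative representation. It doubles the number of dice at each step (n = 1, 2, 4, ..., 32) by convolving the distribution of the sum with itself. For the mean it then reports three quantities: the total probability, which should stay 1; the probability of the extreme value 1, which should be (1/2)^n; and the variance, which should equal σ²/n = 4/(3n) for this die.

```python
from collections import defaultdict
from fractions import Fraction

# The modified die from the text: P(1) = 1/2, P(2) = P(3) = P(4) = 1/6.
die = {1: Fraction(1, 2), 2: Fraction(1, 6), 3: Fraction(1, 6), 4: Fraction(1, 6)}

def convolve(p, q):
    """Distribution of X + Y for independent X ~ p and Y ~ q."""
    out = defaultdict(Fraction)
    for x, px in p.items():
        for y, qy in q.items():
            out[x + y] += px * qy
    return dict(out)

def variance(dist):
    """Exact variance of a distribution given as {value: probability}."""
    m = sum(x * p for x, p in dist.items())
    return sum((x - m) ** 2 * p for x, p in dist.items())

mean_dists = {}                      # n -> distribution of the mean of n dice
sum_dist = die                       # distribution of the sum of n dice
for n in (1, 2, 4, 8, 16, 32):
    mean_dists[n] = {Fraction(s, n): p for s, p in sum_dist.items()}
    if n < 32:
        sum_dist = convolve(sum_dist, sum_dist)   # sum of 2n dice = sum of n + sum of n

for n, dist in mean_dists.items():
    print(f"n={n:2d}  total={sum(dist.values())}  "
          f"P(mean=1)={float(dist[1]):.3e}  variance={variance(dist)}")
```

The variance column shrinks exactly as 4/(3n), which is the σ ÷ √n rule expressed as a variance. Meanwhile P(mean = 1) = (1/2)^n heads rapidly towards zero, as the argument about the extreme bars suggests.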

I hope the visualisation above gives you a sense of why the limiting distribution should be symmetrical and bell shaped, regardless of the original distribution. But this specific visualisation can only really be used for discrete random variables. That is not a serious limitation if we are happy to model a continuous variable using a discrete approximation. For example, if we were modelling height, we could just round off all the heights to the nearest millimetre and use a discrete model.

Below we apply the discrete method to estimate the convolutions for a continuous random variable.

But in the true continuous case, where each value has probability zero and the probability over an interval is represented by an area, the process of "redistributing bars" no longer applies. Instead, if a continuous random variable has density f, the density of the sum of two independent copies of it is given by the convolution integral

$$(f * f)(t) = \int_{-\infty}^{\infty} f(y)\, f(t - y)\, dy$$
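To see the integral in action, this sketch approximates it numerically with the midpoint rule for one simple choice of f: the uniform density on [0, 1]. That choice is only for illustration, because its convolution is known in closed form. The sum of two independent uniform variables has a triangular density, equal to t on [0, 1] and to 2 - t on [1, 2].

```python
def uniform_pdf(x):
    """Density of the uniform distribution on [0, 1]."""
    return 1.0 if 0.0 <= x <= 1.0 else 0.0

def convolve_at(f, g, t, lo, hi, steps=10_000):
    """Approximate (f * g)(t) = integral of f(y) g(t - y) dy over [lo, hi], midpoint rule.

    [lo, hi] must cover the region where f is non-zero.
    """
    h = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        y = lo + (k + 0.5) * h          # midpoint of the k-th sub-interval
        total += f(y) * g(t - y)
    return total * h

def triangle_pdf(t):
    """Exact density of the sum of two independent uniform [0, 1] variables."""
    return t if 0.0 <= t <= 1.0 else (2.0 - t if 1.0 < t <= 2.0 else 0.0)

for t in (0.25, 0.5, 1.0, 1.5, 1.75):
    approx = convolve_at(uniform_pdf, uniform_pdf, t, lo=0.0, hi=1.0)
    print(f"t={t:4.2f}  numerical={approx:.4f}  exact={triangle_pdf(t):.4f}")
```

Convolving the triangle with the uniform density again would give a smoother, more bell-like curve. This is the continuous counterpart of the repeated bar redistribution.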

There is a proof of the central limit theorem for continuous random variables below, but I will make one more comment which belongs in the "intuition" section...

The fact that the normal distribution appears here is less surprising when we think about how the normal distribution was derived in the first place. Gauss derived the normal (Gaussian) distribution to describe errors in astronomical measurements. One of his key assumptions was that given "several measurements of the same quantity, the most likely value of the quantity being measured is their average." (Stahl 2006)

Reflecting on this assumption, we see that the connection between sample means and the normal distribution has been "baked in" from the start!

Proof of the Central Limit Theorem

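We have n independent and identically distributed random variables $X_1$ to $X_n$, each with mean $\mu$ and variance $\sigma^2$. The sample mean is given by

$$\bar{X}_n = \frac{1}{n}\sum_{i=1}^{n} X_i$$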

The sample mean will converge to the population mean as $n \to \infty$ (this is the law of large numbers). So the random variable $\bar{X}_n - \mu$ will converge to zero.

It turns out that the rate of convergence is $1/\sqrt{n}$, so multiplying by a factor of $\sqrt{n}$ results in a random variable, $\sqrt{n}\,(\bar{X}_n - \mu)$, that converges to a limiting distribution. We will show that this limiting distribution is a normal distribution with mean zero and variance $\sigma^2$.
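One way to see why $\sqrt{n}$ is the right scaling factor is to compute variances. This uses independence (so variances add) and the assumption that $\sigma^2$ is finite:

```latex
\operatorname{Var}(\bar{X}_n)
  = \frac{1}{n^2}\sum_{i=1}^{n} \operatorname{Var}(X_i)
  = \frac{n\sigma^2}{n^2} = \frac{\sigma^2}{n},
\qquad
\operatorname{Var}\!\big(\sqrt{n}\,(\bar{X}_n - \mu)\big)
  = n \cdot \frac{\sigma^2}{n} = \sigma^2
```

Scaling by a smaller power of n would still send the variance to zero, and scaling by a larger power would make it grow without bound.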

The method shown here is to prove that the Fourier transforms of both distributions are equal.

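The Fourier transform of a continuous function g is (using the angular-frequency convention):

$$\hat{g}(\omega) = \int_{-\infty}^{\infty} g(x)\, e^{-i\omega x}\, dx$$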

If you have not seen it already, you really should take a look at 3Blue1Brown's article and video on Fourier transforms.

Let's start with the right hand side, the normal distribution with mean zero, variance $\sigma^2$ and density function

$$g(x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{x^2}{2\sigma^2}}$$

Gaussian functions have the remarkable property that the Fourier transform of a Gaussian is itself another Gaussian. To see why, let's take the derivative of both sides:

$$g'(x) = -\frac{x}{\sigma^2}\, g(x)$$

This is essentially the differential equation that Gauss obtained in order to define the Gaussian distribution.

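To show that the Fourier transform satisfies a similar differential equation (with a different constant), let's take the Fourier transform of both sides of $g'(x) = -\frac{x}{\sigma^2}\, g(x)$, starting with the left hand side. Integrating by parts gives the result below. The boundary term vanishes because $g(x) \to 0$ as $x \to \pm\infty$.

$$\int_{-\infty}^{\infty} g'(x)\, e^{-i\omega x}\, dx = i\omega\, \hat{g}(\omega)$$

On the right hand side, differentiating $\hat{g}$ under the integral sign shows that the Fourier transform of $x\, g(x)$ is $i\, \hat{g}'(\omega)$. Equating the two transforms gives $i\omega\, \hat{g}(\omega) = -\frac{i}{\sigma^2}\, \hat{g}'(\omega)$, or

$$\hat{g}'(\omega) = -\sigma^2\, \omega\, \hat{g}(\omega)$$

Now we can see that this has the same form as the Gaussian differential equation, with the constant $\frac{1}{\sigma^2}$ multiplied by a factor of $\sigma^4$. Therefore it also has a solution of a similar form, which is

$$\hat{g}(\omega) = \hat{g}(0)\, e^{-\frac{\sigma^2\omega^2}{2}} = e^{-\frac{\sigma^2\omega^2}{2}}$$

since $\hat{g}(0) = \int_{-\infty}^{\infty} g(x)\, dx = 1$.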
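This result can be checked numerically. The sketch approximates $\hat{g}(\omega) = \int g(x)\, e^{-i\omega x}\, dx$ with the midpoint rule on a truncated range and compares it with $e^{-\sigma^2\omega^2/2}$. It assumes the angular-frequency convention. The value σ = 1.5 and the integration limits are arbitrary choices, and the Gaussian is negligible beyond about 10σ.

```python
import cmath
import math

def fourier_transform(g, omega, lo=-20.0, hi=20.0, steps=20_000):
    """Midpoint-rule approximation of the integral of g(x) * exp(-i*omega*x) over [lo, hi]."""
    h = (hi - lo) / steps
    total = 0j
    for k in range(steps):
        x = lo + (k + 0.5) * h              # midpoint of the k-th sub-interval
        total += g(x) * cmath.exp(-1j * omega * x)
    return total * h

sigma = 1.5                                  # arbitrary standard deviation for the check

def gaussian(x):
    """Normal density with mean 0 and variance sigma**2."""
    return math.exp(-x ** 2 / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))

for omega in (0.0, 0.5, 1.0, 2.0):
    approx = fourier_transform(gaussian, omega)
    exact = math.exp(-sigma ** 2 * omega ** 2 / 2)
    print(f"omega={omega}: numerical={approx.real:.6f}{approx.imag:+.1e}j  exact={exact:.6f}")
```

The imaginary parts come out at rounding-error level because the Gaussian is symmetric about zero.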

This special relationship between Fourier transforms and Gaussians is not the only reason that Fourier transforms are useful in this proof. Applying a Fourier transform to the sample mean also has the nice property that it transforms the convolution operation into a multiplication.

Let's say the $X_i$ are continuous random variables, and let each centered random variable $X_i - \mu$ have density function $f$. They must all have the same distribution because we assumed they are identically distributed. The distribution for the sum $S_n = \sum_{i=1}^{n} (X_i - \mu)$ of the centered random variables is then given by their convolution (denoted with a ∗):

$$f_{S_n} = \underbrace{f * f * \cdots * f}_{n \text{ times}}$$

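When we take a Fourier transform of this convolution, we will obtain a product of n Fourier transforms. To see why this occurs, let's first consider the Fourier transform of the convolution of two functions, $g = f * f$:

$$\hat{g}(\omega) = \int_{-\infty}^{\infty} \left( \int_{-\infty}^{\infty} f(y)\, f(t - y)\, dy \right) e^{-i\omega t}\, dt$$

We can reverse the order of integration:

$$\hat{g}(\omega) = \int_{-\infty}^{\infty} f(y) \left( \int_{-\infty}^{\infty} f(t - y)\, e^{-i\omega t}\, dt \right) dy$$

Then use a linear substitution $u = t - y$ in the inner integral to rewrite it as a Fourier transform:

$$\hat{g}(\omega) = \int_{-\infty}^{\infty} f(y)\, e^{-i\omega y} \left( \int_{-\infty}^{\infty} f(u)\, e^{-i\omega u}\, du \right) dy = \int_{-\infty}^{\infty} f(y)\, e^{-i\omega y}\, \hat{f}(\omega)\, dy$$

This inner Fourier transform does not depend on y, so we have

$$\hat{g}(\omega) = \hat{f}(\omega) \int_{-\infty}^{\infty} f(y)\, e^{-i\omega y}\, dy = \hat{f}(\omega)^2$$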
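The same identity holds for discrete distributions, where the integral becomes a sum: $\hat{p}(\omega) = \sum_x p(x)\, e^{-i\omega x}$. This discrete transform is an illustrative stand-in chosen here. The sketch checks numerically, for the modified die from the intuition section, that transforming the two-dice distribution gives the square of the one-die transform.

```python
import cmath
from collections import defaultdict

# The modified die: P(1) = 1/2, P(2) = P(3) = P(4) = 1/6.
die = {1: 1 / 2, 2: 1 / 6, 3: 1 / 6, 4: 1 / 6}

def convolve(p, q):
    """Distribution of X + Y for independent X ~ p and Y ~ q."""
    out = defaultdict(float)
    for x, px in p.items():
        for y, qy in q.items():
            out[x + y] += px * qy
    return dict(out)

def transform(p, omega):
    """Discrete analogue of the Fourier transform: sum over x of p(x) * exp(-i * omega * x)."""
    return sum(px * cmath.exp(-1j * omega * x) for x, px in p.items())

two_dice = convolve(die, die)
for omega in (0.3, 1.0, 2.5):
    lhs = transform(two_dice, omega)        # transform of the convolution
    rhs = transform(die, omega) ** 2        # product of the individual transforms
    print(f"omega={omega}: |lhs - rhs| = {abs(lhs - rhs):.2e}")
```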

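This result can be extended to take the Fourier transform of the convolution of the n functions that make up $\sqrt{n}\,(\bar{X}_n - \mu) = \sum_{i=1}^{n} \frac{X_i - \mu}{\sqrt{n}}$, each of which has density function $\sqrt{n}\, f(\sqrt{n}\, x)$. Note that the factor of $\sqrt{n}$ also appears outside the probability density function to preserve the total area of 1.

Applying the "time-scaling" property, the Fourier transform of $\sqrt{n}\, f(\sqrt{n}\, x)$ becomes $\hat{f}(\omega/\sqrt{n})$, so the Fourier transform of the convolution is:

$$\left[\hat{f}\!\left(\frac{\omega}{\sqrt{n}}\right)\right]^n = \left( \int_{-\infty}^{\infty} f(x)\, e^{-i\omega x/\sqrt{n}}\, dx \right)^n$$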
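The time-scaling step follows from the substitution $u = \sqrt{n}\, x$, so that $dx = du/\sqrt{n}$. This is a short derivation assuming the convention $\hat{f}(\omega) = \int f(x)\, e^{-i\omega x}\, dx$:

```latex
\int_{-\infty}^{\infty} \sqrt{n}\, f(\sqrt{n}\, x)\, e^{-i\omega x}\, dx
  = \int_{-\infty}^{\infty} f(u)\, e^{-i\omega u/\sqrt{n}}\, du
  = \hat{f}\!\left(\frac{\omega}{\sqrt{n}}\right)
```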

Here comes the key part of the proof...

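We can expand the exponential term using the Taylor series to give a series of integrals:

$$\hat{f}\!\left(\frac{\omega}{\sqrt{n}}\right) = \int_{-\infty}^{\infty} f(x)\, dx - \frac{i\omega}{\sqrt{n}} \int_{-\infty}^{\infty} x\, f(x)\, dx - \frac{\omega^2}{2n} \int_{-\infty}^{\infty} x^2 f(x)\, dx + \cdots$$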

Analysing this series term by term:

- The first term equals 1, because the area under the PDF must be 1.

- The second term is a multiple of the mean $\int x\, f(x)\, dx$, which is zero as we already centered the random variable by subtracting μ.

- The third term is a multiple of $\int x^2 f(x)\, dx$, which is just the variance $\sigma^2$ because the mean is zero.

- The sum of all subsequent terms (...) approaches zero faster than $1/n$.
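The three leading terms can be checked on a concrete example. This sketch takes the modified die from the intuition section and centers it by subtracting its mean of 2, so it takes the values -1, 0, 1, 2 with probabilities 1/2, 1/6, 1/6, 1/6. It computes the zeroth, first and second moments exactly. It then uses the discrete transform $\sum_x f(x)\, e^{-i\omega x}$ as a stand-in for the integral, an assumption for illustration. With that transform it checks that the remainder after the first three terms shrinks faster than 1/n, meaning that n times the remainder tends to zero.

```python
import cmath
from fractions import Fraction

# Centered version of the modified die: values x - 2 with the die's probabilities.
centered = {-1: Fraction(1, 2), 0: Fraction(1, 6), 1: Fraction(1, 6), 2: Fraction(1, 6)}

def moment(dist, k):
    """Exact k-th moment: sum over x of x**k * p(x)."""
    return sum(Fraction(x) ** k * p for x, p in dist.items())

print("area:", moment(centered, 0), " mean:", moment(centered, 1), " variance:", moment(centered, 2))

def transform(dist, omega):
    """Discrete analogue of the Fourier transform used in the proof."""
    return sum(float(p) * cmath.exp(-1j * omega * x) for x, p in dist.items())

sigma2 = float(moment(centered, 2))   # 4/3
omega = 1.0
for n in (10, 100, 1_000, 10_000):
    # Remainder after the terms 1 - 0 - sigma^2 * omega^2 / (2n) of the Taylor series.
    remainder = transform(centered, omega / n ** 0.5) - (1 - sigma2 * omega ** 2 / (2 * n))
    print(f"n={n:6d}  n * |remainder| = {n * abs(remainder):.5f}")
```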

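Now using Euler's limit definition for $e^x$, namely $e^x = \lim_{n \to \infty} \left(1 + \frac{x}{n}\right)^n$, we have

$$\lim_{n \to \infty} \left[\hat{f}\!\left(\frac{\omega}{\sqrt{n}}\right)\right]^n = \lim_{n \to \infty} \left(1 - \frac{\sigma^2\omega^2}{2n} + o\!\left(\frac{1}{n}\right)\right)^n = e^{-\frac{\sigma^2\omega^2}{2}}$$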

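which is the same as the Fourier transform of the normal distribution as shown above. Because the Fourier transform is bijective (one-to-one), a distribution is determined by its transform. Lévy's continuity theorem then ensures that convergence of the transforms implies convergence of the distributions. This completes the proof!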
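To watch the final limit happen, this sketch takes the discrete transform of the centered die, again an illustrative stand-in for the continuous density f. It evaluates that transform at ω/√n, raises it to the n-th power, and compares the result with the Gaussian transform $e^{-\sigma^2\omega^2/2}$, where σ² = 4/3 for this die. The choice ω = 1 is arbitrary.

```python
import cmath
import math

# Centered modified die (values x - 2), with variance sigma^2 = 4/3.
centered = {-1: 1 / 2, 0: 1 / 6, 1: 1 / 6, 2: 1 / 6}
sigma2 = 4 / 3

def transform(dist, omega):
    """Discrete analogue of the Fourier transform: sum of p(x) * exp(-i * omega * x)."""
    return sum(p * cmath.exp(-1j * omega * x) for x, p in dist.items())

def scaled_power(dist, omega, n):
    """Transform of sqrt(n) * (sample mean - mu): [f_hat(omega / sqrt(n))] ** n."""
    return transform(dist, omega / math.sqrt(n)) ** n

omega = 1.0
target = math.exp(-sigma2 * omega ** 2 / 2)
for n in (1, 10, 100, 1_000, 10_000):
    value = scaled_power(centered, omega, n)
    print(f"n={n:6d}  value={value.real:+.5f}{value.imag:+.5f}j  error={abs(value - target):.5f}")
```

For this skewed die the error falls roughly like 1/√n. The leading correction comes from the third-moment term hidden in the "(...)" of the series.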